Apple’s AI is coming: what to expect and will there be a Siri successor?

AI-powered smarts from the Cupertino tech giant are on the way. But just what will it end up looking like when it arrives?

Now that Vision Pro is available and the Apple Car has officially been scrapped, what’s next for the Cupertino-based tech giant? If you’ve been keeping up with the latest, you’ll know that all the big players are making a splash in AI. But Apple has been notably absent from the space. What gives? Well, as per usual, the brand is keeping its cards close to its chest. Per a few recent teasers, there’s an Apple AI in the works and we should get to see it soon.

At Apple’s most recent shareholder meeting, Tim Cook said that the company is going to “break new ground” in generative AI, and that it’s coming this year. In interviews and on other calls, Apple has teased that it’s working on AI in some capacity. But just what might this look like, and when will we see it?

Is Apple’s AI a Siri successor?

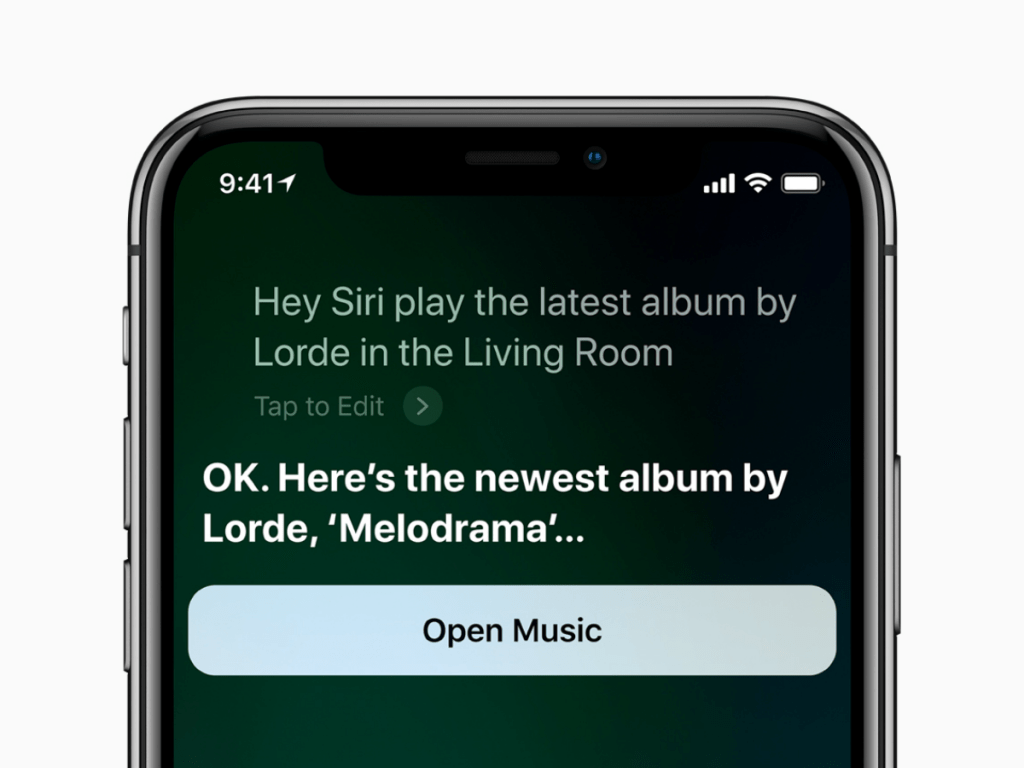

The most obvious place to start with Apple’s AI is Siri. The smart assistant has been lagging behind its competitors for a while now, especially Google Assistant. Using AI to train Siri to give more natural responses and to understand requests more accurately would go a long way towards improving the assistant. But we think Apple’s going to go further.

Remember the “break new ground” in generative AI line? Perhaps Apple’s working on baking AI straight into Siri. OpenAI’s ChatGPT chatbot is one of the most popular around, and it recently launched a voice assistant version. Even Google’s Gemini chatbot has a voice assistant, which can actually replace the Google Assistant on Android smartphones. But Apple wouldn’t want you to download an app – that would be far too inelegant.

We reckon that Apple’s AI is going to arrive as a giant Siri overhaul. Thanks to better-trained models, Siri should be able to understand requests and respond more accurately (and if it could handle two requests at once, that would be a dream). With generative AI built in, Siri would be able to come up with answers on the spot. It would do a much better job of searching the web, too, as well as crafting a response to just about anything you can chuck at it.

According to an Apple research paper, the company has an AI model that can understand app screens and the context behind them. The paper doesn’t go into detail here, but this sort of feature could allow Siri to understand what an app is doing and handle a request for you. For example, if it can see how the Uber app works, it might be able to book a ride for you.

There have been rumblings about Apple working on a search engine for years now, fuelled by its hiring of search experts. But what if those experts were actually working on this AI version of Siri? AI chatbots are already gunning to replace search engines, so building one into Siri would go a long way towards eliminating the need to type anything into a search bar. It would put Apple at the front of the pack for AI, and in front of the most faces.

Does Apple have the tech?

Here’s one that’ll really get you. Throughout 2023, Apple bought 32 separate AI companies to get hold of their technology. The latest purchase was DarwinAI in mid-March 2024. And what’s DarwinAI’s MO? Making AI systems smaller and faster. You know, exactly the type of thing Apple would want to do to squeeze an AI-powered Siri onto the iPhone…

If that wasn’t enough, the Cupertino tech giant quietly detailed its own LLM in a research paper. Dubbed MM1, this Apple AI model is multimodal, meaning it can work with more than one type of input, such as images as well as text. It’s designed to caption images, answer questions about visual content, and carry out natural language inference. While unconfirmed, it looks to be trained on a smaller set of specific tasks, but what it can handle, it should tackle really well. Researchers have described it as “state-of-the-art”, and noted that it beats other LLMs in benchmarks.

At the same time, Bloomberg revealed that Apple and Google are in talks over a deal to embed Google’s own Gemini AI into the iPhone. Details are scarce, but the deal would allow Apple to license Gemini for use on the iPhone. It’s also unclear exactly in what capacity it would be used. Some rumours suggest Apple’s own LLM isn’t as good, so the company is bringing in outside help. Other rumours suggest Gemini would handle broader queries – the things Apple’s specialised LLM isn’t trained on – making it a combination of both. We think the latter sounds more likely. Apple could even run both models on every query at the same time, comparing the results to produce a more accurate answer.

More recently, Apple revealed it has whipped up ReALM, a shiny new AI model that promises to turn Siri’s understanding of our ramblings into something resembling actual comprehension. It’s all about getting Siri to grasp the “this and that” in conversations by translating what’s on your screen into something it can work with, making interactions more natural and less frustrating. Apple’s researchers even claim it outperforms GPT-4, the model behind ChatGPT Plus, at this particular job. In practice, this means less repeating yourself and more actually getting stuff done. The upshot? An Apple AI voice assistant that’s not just smart but genuinely helpful.

Expect plenty of more subtle features

That’s a pretty big swing for Apple’s AI, but you can also expect some more subtle features to make their way into iOS and across other products. Arc, the new browser on the block, uses AI to summarise webpages for you. Apple always enjoys giving Safari a big overhaul, and a feature like this would go a long way towards boosting its status as a game-changing browser.

Keynote, Pages, and Numbers could all get similar AI powers. Microsoft’s Copilot lives in Word and the rest of the Office apps, giving users access to an assistant that can help compose and format documents. Google even has the same for Docs, Slides, and the rest. Since Apple’s productivity apps are aimed squarely at consumers, some AI assistance makes perfect sense.

Emails and texts would likely get smart replies, similar to what you can do in Gmail. AI would understand the context of what’s been sent to you and pre-draft replies you can use as a template. Even Spotify uses AI now, offering a DJ that mixes up personalised playlists on the spot (complete with an AI voice host). Could we see something similar come to Apple Music?

While Apple has avoided explicitly using the term “AI” until now, the latest MacBook Air with M3 launched with the tagline “the world’s best consumer laptop for AI”. There aren’t many AI features baked into macOS right now, so we’re guessing this hints at some big new features that will be introduced at WWDC. At the very least, it points to the AI processing capabilities of Apple’s hardware, which is significant for any future features.

These are all guesses at features Apple could introduce, based on what its rivals already offer. Obviously, we can’t be sure what the tech giant will do, but these could give us a good indication. We can expect more subtle software refinements as well, such as improved dictation, predictive text, and more. The goal behind adding AI to software is to make things simpler for the end user – and that’s kind of Apple’s MO.

When do we actually get to see it?

As with most major software developments, you can expect to hear about Apple’s AI dabblings at its WWDC developer conference in June. It’s the same event where Apple unveils all of its latest software, so it would make the most sense for the tech giant to start rolling these AI features out in its next major software releases.

We can expect to see iOS/iPadOS 18, watchOS 11, and macOS 15 in the summer. If Apple is launching any big AI features, we’re almost certain that we’ll see them in the latest software.